How Does Google Crawl Your Website?

Search engines are tools or websites that give users multiple results for a user-defined query. A user-defined query is anything a user types into a search engine, e.g. the keyword akshadainfosystem.com searched on Google.

Different search engines use different algorithms to produce results for a user-defined query. Yahoo, Bing, and Google are examples of search engines, and Google is the most popular search engine in the world.

After you deploy your website on a server, it has a presence on the internet. Next, use any tool to generate a sitemap.xml file and upload it to the server where your website is hosted. Then sign in with your credentials and create a Google Webmaster Tools account (now called Google Search Console). After creating the account, claim (verify) your ownership of the site and submit your sitemap to Google.
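If you would rather not use a third-party tool, you can produce a sitemap.xml with a few lines of Python. The sketch below follows the sitemaps.org 0.9 format, which is a `<urlset>` of `<url>`/`<loc>` entries with an optional `<lastmod>`. The function name `build_sitemap` and the page list are illustrative assumptions and do not come from any particular tool.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Namespace defined by the sitemaps.org protocol, version 0.9.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls, lastmod=None):
    """Return the text of a sitemap.xml file listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = page  # ElementTree escapes & and < for us
        if lastmod is not None:
            # A plain YYYY-MM-DD date is a valid <lastmod> value.
            ET.SubElement(entry, "lastmod").text = lastmod.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical page list: replace with the real URLs of your site.
    pages = [
        "http://www.akshadainfosystem.com/",
        "http://www.akshadainfosystem.com/service/web-developement",
    ]
    print(build_sitemap(pages, lastmod=date.today()))
```

Save the printed output as sitemap.xml in the root directory of your site, so that it covers every page on the host. Then submit its URL in your webmaster account.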

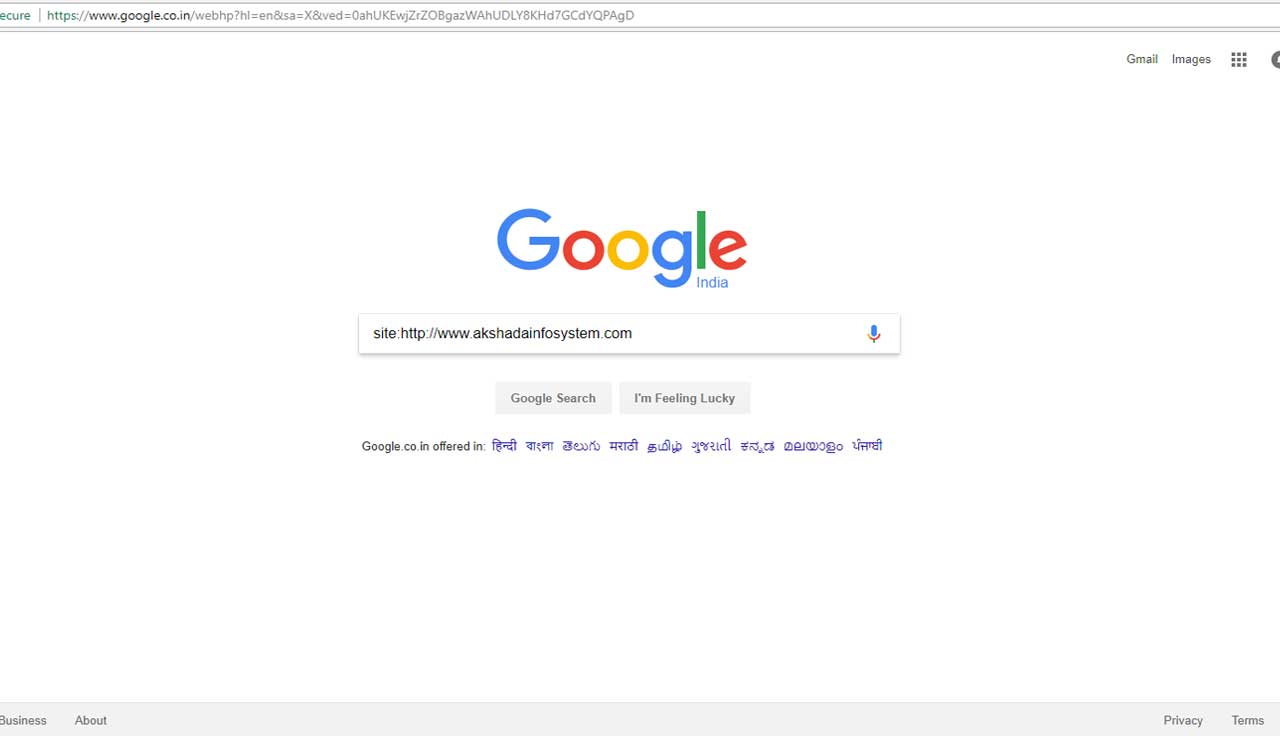

No one can guarantee when your website will be indexed, but Google generally indexes a new website within 3 to 5 days. After indexing, you can see how many URLs Google has indexed in two ways: in your webmaster account, or by searching Google for 'site:domain', e.g. site:http://www.akshadainfosystem.com. The results list all indexed links, each with a heading and a short description.

Crawling:

Crawling is the process by which Googlebot discovers new and updated pages to be added to the Google index.

Google uses a huge set of computers to fetch (or "crawl") billions of pages on the web. The program that does the fetching is called Googlebot (also known as a robot, bot, or spider). Googlebot uses an algorithmic process: computer programs determine which sites to crawl, how often, and how many pages to fetch from each site.

Google's crawl process begins with a list of web page URLs, generated from previous crawl processes and augmented with Sitemap data provided by webmasters. As Googlebot visits each of these websites, it detects links on each page and adds them to its list of pages to crawl. New sites, changes to existing sites, and dead links are noted and used to update the Google index.
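The crawl loop described above can be sketched as a breadth-first search. It starts from known URLs, fetches each page, adds newly discovered links to the list, and notes dead links. This is a minimal illustration, not Googlebot's implementation. `fetch` is a hypothetical callable that stands in for an HTTP client so the example runs offline, and `max_pages` is an assumed safety limit.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_urls, fetch, max_pages=100):
    """Breadth-first crawl.

    seed_urls: starting list (e.g. URLs from previous crawls plus sitemap URLs).
    fetch(url): returns the page HTML, or None if the link is dead.
    Returns (pages, dead_links), where pages maps URL -> HTML.
    """
    seeds = list(dict.fromkeys(seed_urls))  # drop duplicate seeds, keep order
    frontier = deque(seeds)                 # URLs waiting to be crawled
    seen = set(seeds)                       # every URL ever queued
    pages, dead = {}, []
    while frontier and len(pages) + len(dead) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            dead.append(url)  # record the dead link
            continue
        pages[url] = html
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links against the current page and drop #fragments.
            link, _ = urldefrag(urljoin(url, href))
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return pages, dead


if __name__ == "__main__":
    # A tiny in-memory "web" standing in for real HTTP fetches (hypothetical URLs).
    fake_web = {
        "http://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
        "http://example.com/about": '<a href="/">Home</a>',
        "http://example.com/blog": '<a href="/blog/post-1#top">Post</a> <a href="/old">Old</a>',
        "http://example.com/blog/post-1": "<p>Hello</p>",
    }
    pages, dead = crawl(["http://example.com/"], fake_web.get)
    print(sorted(pages))
    print(dead)
```

The `seen` set keeps the crawler from queuing the same URL twice. The page budget bounds the total work.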

Crawler:

A crawler is a service or agent that crawls websites. Generally speaking, a crawler automatically and recursively accesses known URLs of a host that exposes content which can be accessed with standard web browsers. As new URLs are found (through various means, such as from links on existing, crawled pages or from Sitemap files), these are also crawled in the same way.
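Sitemap files are one of the sources of new URLs mentioned above. A crawler could read the `<loc>` entries of a sitemap.xml and merge them with the URLs it already knows, as in this sketch. The function names are hypothetical. The namespace is the standard sitemaps.org 0.9 one.

```python
import xml.etree.ElementTree as ET

# Prefix mapping for the sitemaps.org 0.9 namespace used in sitemap files.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_sitemap(xml_text):
    """Return the URLs listed in the <loc> elements of a sitemap.xml document."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text]


def merge_seeds(known_urls, sitemap_urls):
    """Known URLs first, then new sitemap URLs; duplicates removed, order kept."""
    return list(dict.fromkeys([*known_urls, *sitemap_urls]))


if __name__ == "__main__":
    sitemap = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>http://example.com/</loc></url>"
        "<url><loc>http://example.com/contact</loc></url>"
        "</urlset>"
    )
    known = ["http://example.com/", "http://example.com/about"]
    print(merge_seeds(known, urls_from_sitemap(sitemap)))
```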

Spider trap:

A spider trap (or crawler trap) is a set of web pages that may intentionally or unintentionally be used to cause a web crawler or search bot to make an infinite number of requests, or to cause a poorly constructed crawler to crash. Web crawlers are also called web spiders, from which the name is derived. Spider traps may be created to "catch" spambots or other crawlers that waste a website's bandwidth. They may also be created unintentionally by calendars that use dynamic pages with links that continually point to the next day or year.
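The calendar case shows why a crawler cannot rely on a "seen URLs" set alone: every next-day link is a brand-new URL. The sketch below pairs a hypothetical calendar generator with a crawl loop that has an assumed page budget (`max_pages`). Without the budget, the loop would never end.

```python
from collections import deque
from datetime import date, timedelta


def calendar_links(url):
    """Hypothetical dynamic calendar: each day's page links to the next day."""
    day = date.fromisoformat(url.rsplit("/", 1)[-1])
    next_day = day + timedelta(days=1)
    return [f"http://example.com/calendar/{next_day.isoformat()}"]


def crawl_with_budget(start_url, get_links, max_pages):
    """Follow links breadth-first until nothing is left or max_pages is reached.

    Returns (pages_fetched, urls_still_queued).
    """
    seen, queue = {start_url}, deque([start_url])
    fetched = 0
    while queue and fetched < max_pages:
        url = queue.popleft()
        fetched += 1
        for link in get_links(url):
            if link not in seen:  # a fresh date is always a fresh URL
                seen.add(link)
                queue.append(link)
    return fetched, len(queue)


if __name__ == "__main__":
    fetched, pending = crawl_with_budget(
        "http://example.com/calendar/2024-01-01", calendar_links, max_pages=50
    )
    print(fetched, pending)  # the budget stops the crawl; the next day is still queued
```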

Common techniques used are:

· Creation of indefinitely deep directory structures, e.g. a URL such as http://www.akshadainfosystem.com/service/web-developement whose path keeps gaining further levels (/service/web-developement/service/web-developement/...).

· Dynamic pages that produce an unbounded number of documents for a web crawler to follow. Examples include calendars and algorithmically generated language poetry.

· Documents filled with a large number of characters, which crash the lexical analyzer parsing the document.
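Crawlers usually defend against these techniques with simple limits. The checks below are illustrative heuristics, not any search engine's published rules:

- a cap on path depth and on repeated path segments, for endless directory structures;
- a size cap applied before parsing, for oversized documents.

Unbounded dynamic pages are usually handled with an overall page budget rather than a per-URL check. All thresholds are assumptions.

```python
from collections import Counter
from urllib.parse import urlsplit

MAX_DEPTH = 8                # assumed limit on the number of path segments
MAX_SEGMENT_REPEATS = 2      # assumed limit on how often one segment may repeat
MAX_DOC_CHARS = 1_000_000    # assumed cap on document size before parsing


def looks_like_trap(url):
    """Heuristic: flag URLs whose paths are suspiciously deep or repetitive."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    if len(segments) > MAX_DEPTH:
        return True
    counts = Counter(segments)
    return any(n > MAX_SEGMENT_REPEATS for n in counts.values())


def safe_document(text):
    """Truncate oversized documents so the parser never sees unbounded input."""
    return text[:MAX_DOC_CHARS]


if __name__ == "__main__":
    print(looks_like_trap("http://www.akshadainfosystem.com/service/web-developement"))
    print(looks_like_trap("http://example.com/a/b/a/b/a/b"))
```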

Indexing:

Indexing is done by Googlebot, which processes each of the pages it crawls in order to compile a massive index of all the words it sees and their location on each page. In addition, it processes information included in key content tags and attributes, such as title tags and ALT attributes. Googlebot can process many, but not all, content types; for example, it cannot process the content of some rich media files or dynamic pages.
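A word-position index of the kind described above can be sketched with Python's standard `html.parser`. This toy version is an illustration only, not how Google actually stores its index. It splits each page into lowercase words, taken from the body text, the `<title>` text and `<img alt>` values, and records each word's positions per URL. The names `PageTextParser` and `build_index` are hypothetical.

```python
import re
from collections import defaultdict
from html.parser import HTMLParser

WORD_RE = re.compile(r"[a-z0-9]+")


class PageTextParser(HTMLParser):
    """Split an HTML page into title text, body text chunks and ALT texts."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.chunks = []
        self.alts = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "img":
            alt = dict(attrs).get("alt")
            if alt:
                self.alts.append(alt)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        else:
            self.chunks.append(data)


def build_index(pages):
    """pages: {url: html}. Returns {word: {url: [word positions]}}."""
    index = defaultdict(lambda: defaultdict(list))
    for url, html in pages.items():
        parser = PageTextParser()
        parser.feed(html)
        parser.close()
        # Title and ALT text are indexed together with the body text.
        stream = " ".join([parser.title, *parser.chunks, *parser.alts])
        for pos, word in enumerate(WORD_RE.findall(stream.lower())):
            index[word][url].append(pos)
    return index


if __name__ == "__main__":
    page = (
        "<html><head><title>Web Design</title></head><body>"
        "<p>We build web sites</p><img src='a.png' alt='design team'>"
        "</body></html>"
    )
    index = build_index({"http://example.com/": page})
    print(dict(index["web"]))  # positions of "web" on the page
```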

How are results displayed for a user-defined query?

When you enter a query, Google's systems search the content indexed in their system and return the results most relevant to you. For example, suppose you offer a website development and design service and a user enters the query "website development and design company". Google searches all the content indexed in its system (simply put, in its databases) and returns the results that are most relevant to that user. This works the same way as searching for something anywhere else.
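Answering a query from such an index can be sketched as follows. The ranking here is a deliberately simple assumption: documents that match more distinct query words come first, and ties are broken by total matches. Google's real relevance ranking uses many more signals. All names and sample pages are hypothetical.

```python
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase a text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """docs: {url: text}. Returns {word: Counter({url: occurrences})}."""
    index = defaultdict(Counter)
    for url, text in docs.items():
        for word in tokenize(text):
            index[word][url] += 1
    return index


def search(index, query, top_k=10):
    """Rank URLs by distinct query words matched, then by total matches."""
    matched, hits = Counter(), Counter()
    for word in set(tokenize(query)):
        for url, count in index.get(word, {}).items():
            matched[url] += 1
            hits[url] += count
    ranked = sorted(matched, key=lambda u: (-matched[u], -hits[u], u))
    return ranked[:top_k]


if __name__ == "__main__":
    docs = {
        "http://example.com/services": "website development and design services",
        "http://example.com/blog": "a blog post about design",
        "http://example.com/contact": "contact us by phone",
    }
    index = build_index(docs)
    print(search(index, "website development and design company"))
```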

When showing results, Google draws on rich datasets and orders the results according to its own standards (ranking factors). Some of these factors are as follows:

1. PageRank:

PageRank is a measure of the importance of a page based on the incoming links from other pages. In simple terms, each link to a page on your site from another site adds to your site's PageRank.

PageRank is a score rather than a fixed category, but pages are commonly grouped into two broad types:

a) High page rank

b) Low page rank

2. Popularity:

Popular content is found more easily in search. The most-searched items, whose links get the most hits, appear at the top of the search results.
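The PageRank idea from item 1, that a page gains importance from the pages that link to it, can be computed by power iteration. This is a simplified sketch of the classic published formulation, not Google's production ranking. It uses the commonly cited damping factor of 0.85 and treats pages with no outgoing links as linking to every page. The link graph is made up for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """links: {page: [pages it links to]}. Returns {page: score}; scores sum to 1."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page gets a small base share, even with no incoming links.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its rank on, split evenly over its outgoing links.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page (no outgoing links): spread its rank over all pages.
                for t in pages:
                    new_rank[t] += damping * rank[page] / n
        rank = new_rank
    return rank


if __name__ == "__main__":
    # Hypothetical site: "home" receives the most incoming links.
    links = {
        "home": ["about", "services"],
        "about": ["home"],
        "services": ["home"],
        "blog": ["services"],
    }
    for page, score in sorted(pagerank(links).items(), key=lambda kv: -kv[1]):
        print(f"{page:10s} {score:.3f}")
```

In this toy graph, "home" ends up with the highest score because two pages link to it. "blog" keeps only the base share because nothing links to it.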